According to TechRepublic, ReadingMinds.ai is emerging from stealth with a voice-native sentiment analysis platform that aims to give conversational AI a measure of emotional intelligence. The startup uses a unified speech model that handles speech-to-speech processing in a single pass. This preserves vocal cues such as tone, pacing, and pitch, which often reveal how a speaker actually feels. Unlike first-generation systems that flatten voice into text, ReadingMinds’ approach keeps that emotional context intact throughout processing. A beta version is available now, with broad availability planned for early 2026. CEO Stu Sjouwerman says pricing will be accessible to SMBs and midmarket companies, positioning the product as an affordable alternative to enterprise-focused competitors such as Qualtrics and Listen Labs.

Why old voice AI fails

Here’s the thing about current voice assistants like Alexa and Siri: they’re basically voice assembly lines. One model transcribes speech to text, another analyzes the text, and yet another converts the response back to speech. That STT→LLM→TTS pipeline (speech-to-text, language model, text-to-speech) loses most of the emotional richness in translation. It’s like trying to understand someone’s feelings by reading a transcript of what they said. The tone, the hesitation, the excitement: all gone. And we’ve all experienced how robotic those interactions feel. The AI hears the words but completely misses the music.

The single-pass breakthrough

ReadingMinds’ approach is fundamentally different. Instead of handing off between separate modules, it uses a single neural model that listens and interprets in one pass. Think of a human conversation: we don’t mentally transcribe what someone says before gauging their emotional state. We process the words and the delivery simultaneously. According to the company, this unified approach lets the AI detect frustration from acoustic cues and respond with sympathy, or sense anxiety and stay calm, adjusting its own emotional delivery in real time based on what it hears. If that holds up, it’s a big leap from current systems that plow ahead in the same flat tone regardless of context.
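To make the contrast concrete, here is a toy Python sketch. It is not ReadingMinds’ model, whose internals haven’t been published. The `Utterance` fields, the keyword list, and the pitch and speaking-rate thresholds are all hypothetical, and a real single-pass model would learn such cues from raw audio rather than from hand-written rules. The point it illustrates is structural: once speech has been reduced to a transcript, two deliveries of the same words look identical to every downstream stage.

```python
from dataclasses import dataclass

# Hypothetical keyword list for a text-only sentiment stage.
NEGATIVE_WORDS = {"broken", "angry", "useless"}


@dataclass
class Utterance:
    """Hypothetical input: the spoken words plus two prosodic features."""
    text: str
    mean_pitch_hz: float
    words_per_minute: float


def transcribe(u: Utterance) -> str:
    # Stand-in for an STT stage: only the words survive.
    return u.text


def text_label(text: str) -> str:
    # Naive keyword check on the transcript.
    lowered = text.lower()
    return "negative" if any(w in lowered for w in NEGATIVE_WORDS) else "neutral"


def cascaded_read(u: Utterance) -> str:
    # Cascaded pipeline: later stages only ever see the transcript,
    # so identical words always get the identical label.
    return text_label(transcribe(u))


def single_pass_read(u: Utterance) -> str:
    # Toy "single-pass" reader: words and delivery are seen together.
    # The thresholds are arbitrary assumptions, not calibrated values.
    agitated = u.mean_pitch_hz > 220 and u.words_per_minute > 180
    if agitated:
        return "frustrated"
    return text_label(u.text)


calm = Utterance("Sure, that's fine.", mean_pitch_hz=180.0, words_per_minute=140.0)
tense = Utterance("Sure, that's fine.", mean_pitch_hz=260.0, words_per_minute=200.0)
print(cascaded_read(calm), cascaded_read(tense))        # neutral neutral
print(single_pass_read(calm), single_pass_read(tense))  # neutral frustrated
```

Both utterances produce the same transcript, so the cascaded reader labels them identically; only the reader that still sees the delivery can tell them apart.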

Real-world applications

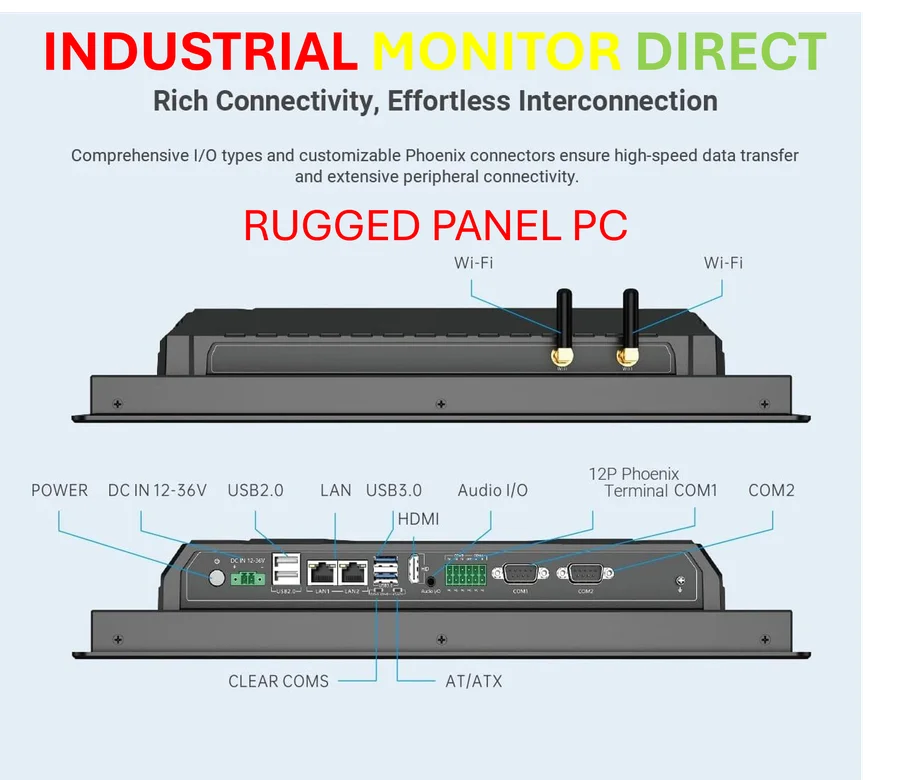

So what does this actually mean for businesses? The company’s AI interviewer, “Emma,” is designed to conduct surveys that feel genuinely human: it detects emotional states and generates follow-up questions on the fly. In customer support, the same capability could let AI agents sense when a caller is getting frustrated and adjust their approach. In sales, it could help identify high-intent buyers through vocal cues that text analysis would miss entirely. Basically, the goal is to move from AI that just processes words to AI that understands how people actually communicate.

The emotional intelligence race

Now, the big question is whether ReadingMinds can actually deliver on this promise. Emotional AI isn’t new; companies have been trying to crack it for years. But most solutions have been expensive enterprise tools that smaller businesses can’t afford. ReadingMinds is betting on strong demand for affordable emotional intelligence in the SMB and midmarket space. The early-2026 timeline gives the company runway to refine its technology, but it also gives competitors time to catch up. Still, if it can make AI that feels genuinely human at a price small businesses can afford, that could change how we interact with technology. The race for emotional AI is heating up, and it’s about time our machines learned to listen better.