According to Forbes, reports published in November by Anthropic and Oligo Security confirm that jailbroken large language models are now being used to carry out large-scale cyberattacks. In the Anthropic case, human operators provided initial direction, after which the attackers used LLM capabilities to autonomously target roughly thirty organizations, a small number of which were successfully breached. The case reported by Oligo involved a botnet that used AI-generated code to attack Ray, an open-source framework for running AI workloads, while mining cryptocurrency and autonomously identifying new targets. Cornell University researchers predicted this exact threat path back in May. OpenAI has even introduced a dedicated AI security researcher called Aardvark to address these emerging challenges, acknowledging that LLMs are becoming dual-use technologies, much like dynamite, commercial airliners, and drones before them.

The Old Rules Are Broken

Here’s the thing: traditional vulnerability management worked because attackers had limited resources. They had to choose between going deep against high-value targets or going broad with spray-and-pray attacks. That entire model just collapsed. With well-crafted prompts, attackers can now build campaigns with human-level sophistication and deploy them against thousands of targets simultaneously. The cost-benefit analysis that defenders relied on? Basically useless now.

Think about what this means for security teams. For years, we’ve prioritized fixes based on threat models that assumed attackers would focus where they’d get the biggest bang for their buck. But when AI eliminates the resource constraints, attackers don’t need to be choosy. They can exploit every vulnerability they find, regardless of how obscure or low-value it might seem. The small defensive advantage we had? Gone.

The Operational Nightmare

And here’s where it gets really messy for defenders. Closing vulnerabilities isn’t just about security – it’s about not breaking legitimate business operations. How many times have you seen a security fix get delayed because it would disrupt a critical application? I’ve lost count. Organizations often leave vulnerabilities unpatched precisely because the cure seems worse than the disease.

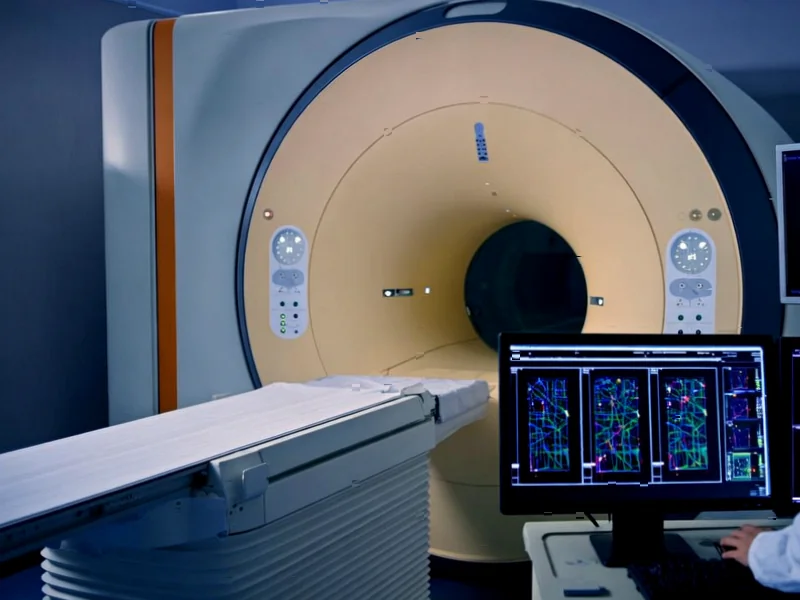

When you’re dealing with industrial systems and manufacturing environments, this becomes even more critical. The stakes are incredibly high when a security patch could take down a production line or disrupt operations, so the systems in these environments have to meet security requirements and operational demands at the same time.

Fighting Fire With Fire

So what’s the answer? We need to fight AI with AI. The old approach of prioritized backlogs and ticketing systems managed by humans just won’t scale against autonomous AI attackers. We need automation-first security that can evaluate operational risk and implement nuanced controls. Instead of binary fix/don’t fix decisions, we need AI that can suggest context-aware mitigations – like blocking an application for users who never use it while allowing controlled access for those who need it.
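To make the idea of a context-aware mitigation concrete, here is a minimal Python sketch of the decision it replaces the binary fix/don’t-fix choice with. It assumes you already have per-user usage data for the vulnerable application and some upstream check (AI-driven or otherwise) that says whether the available patch would break workflows. The class names, the 90-day idle threshold, and the action labels are all hypothetical; the sketch shows only the shape of the decision, not how an AI system would produce its inputs.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional


@dataclass(frozen=True)
class UsageRecord:
    """Hypothetical per-user usage data for the vulnerable application."""
    user: str
    last_used: Optional[date]  # None means the user has never launched the app


@dataclass(frozen=True)
class Vulnerability:
    """Hypothetical vulnerability record."""
    app: str
    patch_breaks_workflows: bool  # assumed output of an operational-risk check


def choose_mitigations(
    vuln: Vulnerability,
    usage: List[UsageRecord],
    today: date,
    idle_days: int = 90,  # illustrative threshold, not a recommendation
) -> Dict[str, str]:
    """Return a per-user action instead of a single fix/don't-fix verdict."""
    cutoff = today - timedelta(days=idle_days)
    plan: Dict[str, str] = {}
    for rec in usage:
        if rec.last_used is None or rec.last_used < cutoff:
            # No recent business need: remove the exposure outright.
            plan[rec.user] = "block"
        elif not vuln.patch_breaks_workflows:
            # Active user and the fix is low-risk: apply it.
            plan[rec.user] = "patch"
        else:
            # Active user but the fix is disruptive: keep access under tighter controls.
            plan[rec.user] = "allow_restricted"
    return plan


if __name__ == "__main__":
    usage = [
        UsageRecord("alice", date(2025, 11, 20)),
        UsageRecord("bob", None),
        UsageRecord("carol", date(2025, 3, 1)),
    ]
    vuln = Vulnerability(app="legacy-reporting-tool", patch_breaks_workflows=True)
    print(choose_mitigations(vuln, usage, today=date(2025, 12, 1)))
    # {'alice': 'allow_restricted', 'bob': 'block', 'carol': 'block'}
```

Returning a per-user plan rather than one verdict is the point: users who never touch the application lose nothing when it is blocked, while the user who depends on it keeps access under tighter controls until a safe fix is available.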

The good news? The same technology that’s empowering attackers can level the playing field. AI can help defenders evaluate risks, suggest intelligent mitigations, and even automatically deploy low-risk fixes. But we’re still facing an uphill battle. The fundamental asymmetry remains – defenders have to protect everything, attackers only need to find one weakness. The question is whether defensive AI can evolve fast enough to counter offensive AI. My money’s on the defenders eventually catching up, but it’s going to be a rough transition.