Anthropic has launched Claude Sonnet 4.5, which the company calls the world’s best coding model after it outscored major competitors on SWE-bench Verified, a widely used software engineering benchmark. Anthropic also reports gains in complex problem-solving, mathematical reasoning, and long-running task execution. The model is available to developers through the Claude.ai app, the API, and Claude Code at the same price as its predecessor, Sonnet 4.

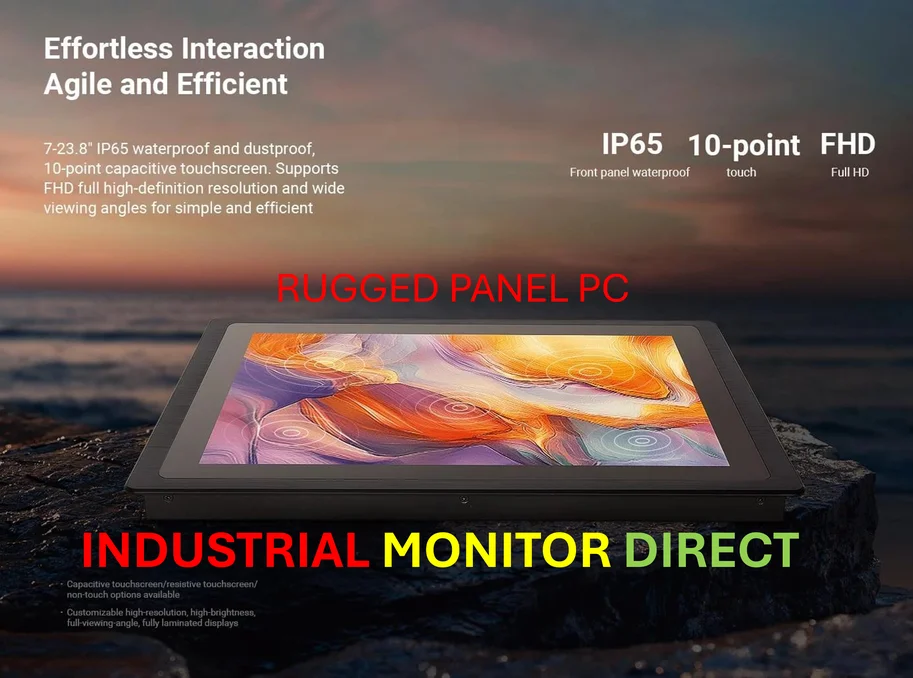

Benchmark Dominance and Technical Breakthroughs

Claude Sonnet 4.5 posts higher scores than its predecessors on several widely cited evaluations. On SWE-bench Verified, a human-curated subset of the SWE-bench software engineering benchmark, Sonnet 4.5 surpassed not only Anthropic’s previous flagship model, Claude Opus 4.1, but also leading competitors including GPT-5 Codex, GPT-5, and Gemini 2.5 Pro. Anthropic also reports that the model maintained focus for more than 30 hours on complex, multi-step tasks, a capability most relevant to autonomous agents that run for long stretches in the background.

Computer use improved sharply as well. Sonnet 4.5 scored 61.4% on OSWorld, a benchmark that tests AI performance on real-world computer tasks, up from Sonnet 4’s 42.2% just four months earlier, a gain of 19.2 percentage points. Anthropic reports further gains in mathematical reasoning and general problem-solving, positioning Sonnet 4.5 as a general-purpose tool for software development and technical analysis.

Enhanced Safety and Developer Experience

Anthropic describes Sonnet 4.5 as its “most aligned” frontier model to date, featuring improved adherence to human instructions and reduced instances of problematic behaviors like sycophancy and deception. The model incorporates strengthened AI Safety Level 3 (ASL-3) protections within Anthropic’s framework and demonstrates enhanced resistance to prompt injection attacks. These safety improvements come alongside performance enhancements, addressing growing industry concerns about AI reliability in production environments.

The Claude for Chrome extension, now available to everyone who joined the waitlist last month, uses these capabilities to carry out tasks directly in the browser. Developers can access Sonnet 4.5 through the Claude.ai chatbot, the API, and Claude Code at the same price as the previous Sonnet 4 model. This pricing contrasts with the premiums some competitors charge for their most advanced models, and it could accelerate adoption among professional developers and enterprise teams.
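For readers who want to try the API route, the sketch below shows roughly what a request could look like using only the Python standard library. It assumes Anthropic’s publicly documented Messages endpoint and headers, and it assumes the model identifier `claude-sonnet-4-5`; the article itself does not state an identifier, so check it against the official documentation. The helper names `build_request` and `ask` are illustrative, not part of any Anthropic library.

```python
import json
import os
import urllib.request

# Assumption: the publicly documented Messages API endpoint.
API_URL = "https://api.anthropic.com/v1/messages"
# Assumption: model identifier for Sonnet 4.5; confirm in the official docs.
MODEL_ID = "claude-sonnet-4-5"


def build_request(prompt: str, api_key: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a single-turn Messages API request."""
    payload = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the concatenated text blocks of the reply.

    Reads the key from the ANTHROPIC_API_KEY environment variable.
    """
    request = build_request(prompt, os.environ["ANTHROPIC_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The reply's "content" field is a list of blocks; keep only the text ones.
    return "".join(
        block["text"] for block in body["content"] if block.get("type") == "text"
    )
```

With an API key set in the environment, a call such as `ask("Explain what this regex matches: ^\\d{3}-\\d{4}$")` would return the model’s reply as a string. Building the request separately from sending it keeps the payload easy to inspect before any network call is made.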

Expanded Coding Ecosystem and Tools

Anthropic simultaneously upgraded its entire coding ecosystem, with Claude Code receiving significant feature enhancements. The platform now includes checkpoint functionality allowing users to save progress and revert to previous states, a refreshed terminal interface, and a native VS Code extension. These improvements address common developer workflow challenges, particularly for complex, multi-session coding projects requiring intermittent review and revision.

The company also launched the Claude Agent SDK, which gives developers the same infrastructure that powers Claude Code for building custom AI agents. According to Anthropic’s release, the Claude API adds a context editing feature and a memory tool that let agents run longer and work more efficiently on complex problems. Additional upgrades allow the Claude apps to execute code and create files directly within a conversation, narrowing the gap between conversational AI and practical development environments.

Industry Impact and Future Implications

The move from Sonnet 4 to Sonnet 4.5 in roughly four months illustrates how quickly AI coding assistance is advancing. Anthropic’s steady gains on established benchmarks like SWE-bench suggest meaningful progress toward more capable programming assistants. If the reported ability to stay coherent across sessions of more than 30 hours holds up in practice, it could change how developers approach complex refactoring, debugging, and system architecture tasks.

Industry analysts note that the timing coincides with increased enterprise adoption of AI coding tools. The simultaneous release of enhanced safety features addresses concerns raised in recent AI safety guidelines from multiple governments and industry bodies. As coding assistants become more integrated into development workflows, improvements in alignment and security become increasingly critical for mainstream adoption in sensitive development environments.

References and Additional Information

For technical specifications and benchmark details, developers can consult Anthropic’s official release documentation. The SWE-bench framework and evaluation methodology are detailed in the academic paper “SWE-bench: Can Language Models Resolve Real-World GitHub Issues?” available through arXiv. Current pricing and API access information remains available through Anthropic’s developer portal, with the model now live across all access points including the free Claude.ai chat interface.