The Unseen Classroom Revolution

While educators grapple with surface-level concerns about AI-assisted cheating, a more profound transformation is occurring under the radar. According to Kimberley Hardcastle, a business and marketing professor at Northumbria University, the real danger isn't students using ChatGPT to complete assignments. It's the gradual surrender of educational authority to corporate algorithms that increasingly mediate how knowledge is created, validated, and valued.

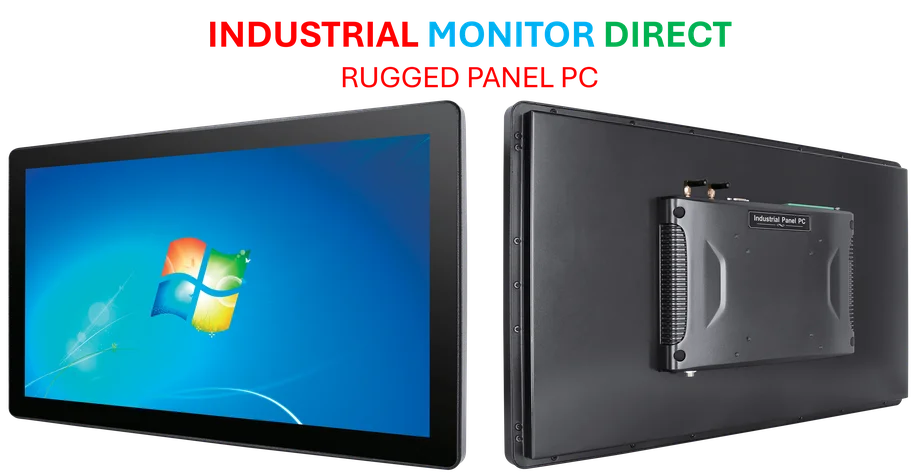

“When we bypass the cognitive journey of synthesis and critical evaluation, we’re not just losing skills,” Hardcastle told Business Insider. “We’re changing our epistemological relationship with knowledge itself.” This shift represents what she calls the “atrophy of epistemic vigilance”—the erosion of our ability to independently verify, challenge, and construct knowledge without algorithmic assistance.

From Learning Tools to Cognitive Frameworks

Data from Anthropic, the company behind Claude AI, reveals the depth of AI’s classroom penetration. After analyzing approximately one million student conversations in April, the company found that 39.3% involved creating or polishing educational content, while 33.5% asked the chatbot to solve assignments directly. These statistics reflect what Hardcastle describes as a fundamental reorientation of learning processes.

“We’re witnessing the first experimental cohort encountering AI mid-stream in their cognitive development,” she noted. “They’re becoming AI-displaced rather than AI-native learners.” This distinction matters because it represents a shift in cognitive practices rather than mere tool adoption. Students increasingly rely on AI not just to find answers but to determine what constitutes a valid answer in the first place.

The Structural Consequences of Algorithmic Dependency

The implications extend far beyond individual classrooms. As partnerships between major technology platforms and corporations deepen, the infrastructure supporting educational AI becomes increasingly concentrated in corporate hands.

Hardcastle warns that the danger isn’t overt censorship or dramatic control, but what she terms “subtle epistemic drift.” She explains: “When we consistently defer to AI-generated summaries and analyses, we inadvertently allow commercial training data and optimization metrics to shape what questions get asked and which methodologies appear valid.”

Preserving Human Epistemic Agency

According to Hardcastle, the central question isn’t whether education will “fight back” against AI, but whether it will consciously shape AI integration to preserve human epistemic agency—the capacity to think, reason, and judge independently.

“This affects job prospects not through reduced ability, but through a shifted cognitive framework where validation and creation of knowledge increasingly depend on AI mediation rather than human judgment,” she said. The consequence isn’t necessarily less capable graduates, but professionals whose fundamental relationship with knowledge has been reconfigured by corporate algorithms.

This represents a pivotal moment for educational institutions. The challenge requires moving beyond compliance and operational fixes to address fundamental questions about who holds knowledge authority in an AI-mediated world.

The Path Forward

Hardcastle emphasizes that the risk isn't that current student cohorts will be "worse off," but that education might miss this critical inflection point. Without deliberate action from universities, AI could gradually erode independent thought while market forces continue to favor the corporations that profit from controlling how knowledge is created and validated.

The solution requires developing pedagogical approaches that leverage AI’s capabilities while preserving the essential human elements of critical thinking, epistemic vigilance, and independent judgment. This balancing act represents one of education’s greatest contemporary challenges—ensuring that technological advancement enhances rather than diminishes our capacity for autonomous thought.

As algorithmic systems become increasingly sophisticated across multiple domains, from education to healthcare to business intelligence, the question of who controls knowledge—and how that control is exercised—may become one of the defining issues of our technological era.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.