New AI Tool Empowers YouTube Partners to Protect Their Digital Identity

YouTube has launched a groundbreaking AI-powered likeness detection system that enables creators in its Partner Program to identify and report unauthorized deepfakes and synthetic media featuring their appearance. This proactive approach represents one of the most significant steps any platform has taken to address the growing concern of AI-generated impersonation content.

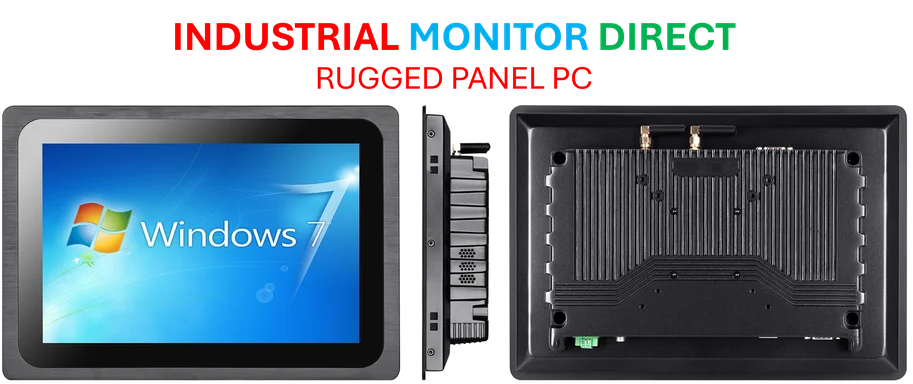

How the Detection System Works

The new feature, accessible through YouTube Studio’s Content Detection tab, requires creators to verify their identity before they can review flagged videos. The system scans uploaded content across the platform and identifies potential matches using AI algorithms that analyze facial features, voice patterns, and other identifiable characteristics.

Like YouTube’s established Content ID system for copyrighted material, this likeness detection tool operates at scale, automatically monitoring the vast volume of daily uploads. Unlike Content ID, however, which primarily targets audio and video copyright infringement, the new technology specifically targets synthetic media and AI-generated content that misappropriates a creator’s likeness without permission.
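YouTube has not published how its matching works, so the following is only an illustrative sketch of one common approach to likeness matching: comparing a numeric "embedding" of a verified creator's face against embeddings extracted from uploads, and flagging anything above a similarity threshold. The function names, the three-dimensional vectors, and the 0.9 threshold are all hypothetical and chosen purely for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_candidates(reference, uploads, threshold=0.9):
    """Return IDs of uploads whose embedding is close to the reference.

    `reference` stands in for a verified creator's likeness embedding and
    `uploads` maps video IDs to embeddings. Both are hypothetical inputs,
    not part of any real YouTube API.
    """
    flagged = []
    for video_id, embedding in uploads.items():
        if cosine_similarity(reference, embedding) >= threshold:
            flagged.append(video_id)
    return flagged


# Toy data: made-up embeddings for three uploads.
reference = [1.0, 0.0, 0.0]
uploads = {
    "creator_own_vlog": [1.0, 0.0, 0.0],
    "suspected_deepfake": [0.9, 0.1, 0.0],
    "unrelated_video": [0.0, 1.0, 0.0],
}

flagged = flag_candidates(reference, uploads)
print(flagged)
```

In this toy setup, both the creator's own vlog and the suspected deepfake clear the threshold. A matcher of this kind measures resemblance only; it cannot by itself tell authentic footage from synthetic footage, which is why a human review step would still be needed.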

Rollout Strategy and Early Limitations

The initial wave of eligible creators received notification emails this morning, with YouTube planning to expand access gradually over the coming months. The platform has been transparent about the tool’s current limitations during this development phase.

In its official guide to the feature, YouTube cautions early users that the system “may display videos featuring your actual face, not altered or synthetic versions,” including legitimate content from the creators themselves. This acknowledgment highlights the technical challenge of distinguishing authorized content from sophisticated deepfakes.

Background and Development Timeline

YouTube originally announced this initiative last year, following growing concerns from creators and talent agencies about the potential misuse of AI technology. The platform began serious testing in December through a pilot program with Creative Artists Agency (CAA), giving several high-profile figures early access to the developing technology.

At the time of the announcement, YouTube emphasized that this collaboration would help “several of the world’s most influential figures have access to early-stage technology designed to identify and manage AI-generated content that features their likeness, including their face, on YouTube at scale.”

Broader Context of YouTube’s AI Strategy

This likeness detection tool exists within a complex landscape where YouTube and its parent company Google are simultaneously developing and promoting AI video generation tools while creating safeguards against their potential misuse. The platform finds itself in the challenging position of both enabling AI creativity and preventing its abuse.

Last March, YouTube implemented additional AI-related policies, including:

- Mandatory labeling for uploads containing AI-generated or significantly altered content

- Strict regulations around AI-generated music that mimics artists’ unique vocal styles

- Enhanced enforcement mechanisms for dealing with synthetic content that could cause harm or mislead viewers

Industry Implications and Future Developments

The introduction of likeness detection technology signals a new era in digital content protection. As AI generation tools become more accessible and sophisticated, platforms face increasing pressure to develop robust systems that protect creators’ rights while maintaining an open ecosystem for legitimate creative expression.

This move by YouTube could set industry standards for how social media platforms handle the complex intersection of AI technology, creator rights, and content moderation. The success or failure of this initiative will likely influence similar developments across other major platforms facing identical challenges with synthetic media.

As the tool evolves and expands to more creators, the entertainment industry will be watching closely to see how effectively it balances protection against false positives, maintains platform usability, and addresses the ever-evolving sophistication of AI-generated content.

References & Further Reading

This article draws from multiple authoritative sources. For more information, please consult:

- https://www.youtube.com/watch?v=zVqQiBb0F-w

- https://support.google.com/youtube/answer/16440338

- https://blog.youtube/news-and-events/creative-artists-agency-responsible-ai-tools-for-talent/
